MaxDiff – short for Maximum Difference Scaling and often called Best–Worst Scaling – is a robust survey technique that ranks a long list of items (features, benefits, messages, claims, services, or value propositions) by relative importance. It’s ideal for B2B research when you need to cut through stakeholder opinions and identify what really moves decision makers.

Why MaxDiff for B2B?

B2B decisions are complex: multiple stakeholders, long cycles, and niche products. Traditional rating scales (e.g., 1–10 importance) often produce inflated scores with little differentiation. MaxDiff forces trade-offs, revealing the true hierarchy of priorities. Use it to:

- Prioritize product features for your roadmap

- Refine value propositions and messaging

- Select packaging or service bundles

- Focus sales collateral on claims that matter

- Inform pricing and tiering by what buyers won’t compromise on

How MaxDiff works

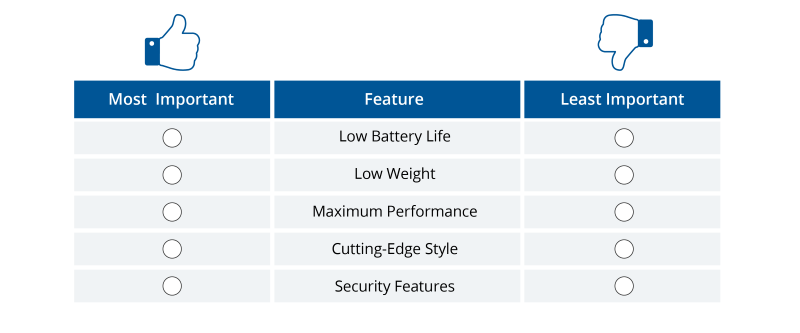

Respondents see short sets – typically 4 or 5 items at a time – from your longer list (say 15–30 items). For each set, they pick the “Best” (most important) and “Worst” (least important) item. After 10–15 such tasks, we analyze their choices with a choice model (commonly a multinomial logit, often estimated with hierarchical Bayes to obtain respondent-level results) to estimate a utility for every item. Those utilities are rescaled to a 0–100 score, giving you a clear ranking, the distance between items, and easy-to-share visuals.

Example task (one screen):

Which is MOST important and LEAST important when choosing an industrial IoT platform?

- Real-time monitoring dashboards

- ISO/IEC 27001-certified security

- Open APIs & developer ecosystem

- 24/7 dedicated technical support

Select Most important and Least important.
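To make the scoring step concrete, here is a minimal Python sketch of count-based scoring, a simpler stand-in for the multinomial logit or hierarchical Bayes models mentioned above, not a replacement for them. The function name `count_scores`, the shortened item labels, and the three responses are hypothetical, and the min–max rescale to 0–100 is just one possible convention (it always places the lowest item at 0, which model-based scores typically do not).

```python
from collections import Counter


def count_scores(tasks):
    """Best-minus-worst count score per item, min-max rescaled to 0-100.

    tasks: iterable of (shown_items, best_item, worst_item) tuples,
    one tuple per completed MaxDiff screen.
    """
    shown, best, worst = Counter(), Counter(), Counter()
    for items, best_item, worst_item in tasks:
        shown.update(items)
        best[best_item] += 1
        worst[worst_item] += 1

    # Raw score in [-1, 1]: share of appearances picked "best"
    # minus share of appearances picked "worst".
    raw = {item: (best[item] - worst[item]) / n for item, n in shown.items()}

    lo, hi = min(raw.values()), max(raw.values())
    if hi == lo:
        # No differentiation at all: place every item mid-scale.
        return {item: 50.0 for item in raw}
    return {item: 100.0 * (r - lo) / (hi - lo) for item, r in raw.items()}


# Hypothetical answers from three respondents to the example screen.
screen = ("Dashboards", "Security", "Open APIs", "Support")
tasks = [
    (screen, "Security", "Dashboards"),
    (screen, "Security", "Support"),
    (screen, "Open APIs", "Dashboards"),
]

scores = count_scores(tasks)
for item, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{item}: {score:.0f}")
```

Counts are a quick sanity check on fielded data; the model-based utilities remain the basis for reported scores and segment comparisons.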

What you get from a MaxDiff study

- Ranked list of items with relative scores (e.g., ISO 27001 security = 100, Open APIs = 82, 24/7 support = 65, dashboards = 43)

- Meaningful gaps (not just “everything is an 8/10”)

- Segment cuts by role, industry, company size, or region – see how CTOs differ from Procurement

- Shortlist recommendations (e.g., top 5 claims to emphasize)

- Optional Anchored MaxDiff to map scores to absolute importance (e.g., “must have” vs. “nice to have”)

Good design principles (so the data stands up to scrutiny)

- Item list size: 12–30 items is common; prune duplicates and overlap before fieldwork.

- Items per set: 4–5 is a sweet spot (reduces cognitive load while enabling trade-offs).

- Number of tasks per respondent: 10–15 keeps surveys efficient (typically ~8–10 minutes).

- Exposure balance: Aim for each respondent to see each item 3–4 times across their tasks (exposures per item ≈ tasks × items per set ÷ list size), using randomized, balanced designs.

- Sample size: In B2B, n=150–400 completes often provide stable estimates; go higher for granular segment analysis.

- Wording: Use clear, mutually exclusive items; avoid compound or ambiguous statements.
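The exposure guideline above is simple arithmetic, and a rough design can be generated in a few lines. The Python sketch below is illustrative only: `average_exposures` and `build_design` are hypothetical helper names, and the greedy "show the least-seen items next" rule balances only how often each item appears. It does not balance which items appear together or their on-screen position, which dedicated design software usually also handles.

```python
import random
from collections import Counter


def average_exposures(n_items, items_per_set, n_tasks):
    """Average number of times one respondent sees each item."""
    return n_tasks * items_per_set / n_items


def build_design(items, items_per_set, n_tasks, seed=0):
    """Greedy design for one respondent: each task shows the least-seen items."""
    rng = random.Random(seed)
    shown = Counter({item: 0 for item in items})
    design = []
    for _ in range(n_tasks):
        pool = list(items)
        rng.shuffle(pool)                         # random tie-breaking
        pool.sort(key=lambda item: shown[item])   # stable sort: least-seen first
        task = pool[:items_per_set]
        rng.shuffle(task)                         # randomize on-screen order
        design.append(task)
        shown.update(task)
    return design, shown


# 20 items, 5 per set, 12 tasks: 12 * 5 / 20 = 3 exposures per item.
items = [f"item_{i}" for i in range(1, 21)]
print(average_exposures(len(items), 5, 12))
design, shown = build_design(items, items_per_set=5, n_tasks=12)
print(min(shown.values()), max(shown.values()))

# A 30-item list with 4 per set and 10 tasks falls well short of 3 exposures.
print(round(average_exposures(30, 4, 10), 2))
```

Running the arithmetic before fieldwork shows quickly whether a long item list needs more tasks, more items per set, or pruning.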

Interpreting MaxDiff scores

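- Scores are relative: A score of 100 isn’t “perfect”; it’s the highest within your study. If “Security” scores 100 and “Open APIs” scores 82, security’s score is about 22% higher than APIs’ (100 ÷ 82 ≈ 1.22). Read that as “22% more important” only when the scores are on a ratio scale (for example, probability-based scores); after a simple min–max rescale, only the ranking and the gaps are interpretable.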

- Spacing matters: Large gaps indicate clear priorities; small gaps suggest items are interchangeable.

- Segment strategy: If Procurement ranks “Total Cost of Ownership” high but IT ranks “Open APIs” high, you have a blueprint for role-specific messaging and sales plays.
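The arithmetic behind these readings can be sketched in Python, using the illustrative scores from the deliverables list earlier in this article. The helper names `relative_lift` and `gaps_to_next` are hypothetical, and the ratio reading assumes the scores sit on a ratio scale.

```python
def relative_lift(score_a, score_b):
    """Percent by which score_a exceeds score_b (assumes ratio-scaled scores)."""
    return 100.0 * (score_a / score_b - 1.0)


def gaps_to_next(scores):
    """Point gap between each item and the next one down the ranking."""
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    return [(a, b, sa - sb) for (a, sa), (b, sb) in zip(ranked, ranked[1:])]


# Illustrative scores taken from the example ranking in this article.
scores = {
    "ISO 27001 security": 100,
    "Open APIs": 82,
    "24/7 support": 65,
    "Dashboards": 43,
}

lift = relative_lift(scores["ISO 27001 security"], scores["Open APIs"])
print(f"Security vs. Open APIs: {lift:.0f}% higher")

gaps = gaps_to_next(scores)
for higher, lower, gap in gaps:
    print(f"{higher} -> {lower}: {gap} points")
```

Listing the gaps next to the ranking makes it easier to see where priorities clearly separate and where adjacent items are close enough to treat as interchangeable.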

When MaxDiff is (and isn’t) the right tool

Use MaxDiff when:

- You need a prioritized list of features, benefits, or messages

- You’re choosing which claims to put on the homepage or in sales decks

- You want evidence-based bundling and tiering decisions

Consider alternatives when:

- You need trade-offs between multi-attribute product profiles (use Conjoint/Choice-Based Conjoint)

- You want to estimate price sensitivity (use dedicated pricing research, such as Van Westendorp or Gabor-Granger)

- You’re mapping market coverage across items (use TURF analysis alongside MaxDiff)

Practical tips for B2B researchers

- Start with a crisp hypothesis: What decisions will the ranking inform? Roadmap, messaging, packaging, or sales enablement?

- Pre-workshop your item list: Align Product, Sales, and Marketing to avoid lookalike items that muddy results.

- Plan segment cuts early: Ensure quotas reflect the segments you care about (e.g., IT vs. Procurement, SMB vs. Enterprise).

- Build a delivery narrative: Pair the ranking with recommendations – which 5 claims go into your next ABM campaign, which 3 features go into the MVP, and which items drop.

FAQs: MaxDiff for B2B market research

What’s the difference between MaxDiff and simple rating scales?

MaxDiff forces trade-offs, producing a clear hierarchy. Ratings often inflate everything and struggle to prioritize.

Can MaxDiff handle technical B2B topics?

Yes. With careful wording and balanced designs, it works well for complex features, compliance claims, and service attributes.

How many respondents do I need?

For stable overall rankings, 150–400 completes often suffice in B2B; increase sample for deep segment breaks.

Can I get absolute importance, not just relative scores?

Yes – Anchored MaxDiff adds a calibration step to interpret whether an item is “must have” vs. “nice to have.”